Goal

I wanted to share the early lessons I learned using PyTorch models in Livebook. Hopefully, these hints will save folks some time as they explore calling PyTorch models from Elixir notebooks.

Starting from the Hugging Face model card

Inspired by Samrat’s blog post on samrat.me, I tried to repeat the example on my local Ubuntu server with an 11 GB Nvidia 3080. Let’s start with the code example on the Hugging Face model card. If we can get that example working, we can then explore the edge conditions to expect when using a PyTorch Transformers-based model.

Confession: because I hadn’t paid close attention to the Python code in Samrat’s blog post, I quickly ran into problems when I tried to run it on my Ubuntu server. Samrat was using a Mac, and the mlx-vlm library is specific to Macs. So I looked up the SmolVLM model card on Hugging Face. SmolVLM-Instruct from HuggingFaceTB is the model I chose to run on the Ubuntu server.

Dependency version is not required

I had to ask for help in understanding how dependencies work in Pythonx. Thank you, Jonatan. The intent is to align with the requirements.txt format, so you can just list “library_a”, “library_b” in the dependencies section without pinning versions.

At this time, the Hugging Face Accelerate library doesn’t auto-magically support MLX for Mac Metal. That is the reason Samrat pulled in the mlx_vlm library and a specific transformers release. For Linux, we can use the most current transformers version.

Mix.install([
  {:pythonx, "~> 0.3.0"}
])

Pythonx.uv_init("""
[project]
name = "project"
version = "0.0.0"
requires-python = "==3.10.*"
dependencies = [
  "torch",
  "transformers",
  "pillow"
]
""")

Pythonx.eval(
  """
  import torch
  from PIL import Image
  from transformers import AutoProcessor, AutoModelForVision2Seq
  from transformers.image_utils import load_image
  from functools import reduce

  DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

  # Load images
  image1 = load_image("https://cdn.britannica.com/61/93061-050-99147DCE/Statue-of-Liberty-Island-New-York-Bay.jpg")
  image2 = load_image("https://huggingface.co/spaces/merve/chameleon-7b/resolve/main/bee.jpg")

  # Initialize processor and model
  processor = AutoProcessor.from_pretrained("HuggingFaceTB/SmolVLM-Instruct")
  model = AutoModelForVision2Seq.from_pretrained(
      "HuggingFaceTB/SmolVLM-Instruct",
      torch_dtype=torch.bfloat16,
      #_attn_implementation="flash_attention_2" if DEVICE == "cuda" else "eager",
  ).to(DEVICE)

  # Create input messages
  messages = [
      {
          "role": "user",
          "content": [
              {"type": "image"},
              {"type": "image"},
              {"type": "text", "text": "Can you describe the two images?"}
          ]
      },
  ]

  # Prepare inputs
  prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
  inputs = processor(text=prompt, images=[image1, image2], return_tensors="pt")
  inputs = inputs.to(DEVICE)

  # Generate outputs
  generated_ids = model.generate(**inputs, max_new_tokens=500)
  generated_texts = processor.batch_decode(
      generated_ids,
      skip_special_tokens=True,
  )

  print(generated_texts[0])
  """,
  %{}
)


Python print()

The print(generated_texts[0]) result appears below the cell, just like IO.inspect or other Elixir output to stdout.

Transformers supports Flash Attention 2 but my GPU does not

Flash Attention 2 is only available on more capable GPUs. Among consumer GPUs, as far as I know, only the 3090, 4090 and 5090 are capable of Flash Attention 2, while modern server GPUs support it. On my card, the line setting _attn_implementation needs to be commented out. Even though CUDA is available on a standard consumer GPU, that does not mean Flash Attention 2 is supported.
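Rather than commenting the line in and out by hand, the choice can be made in code. Here is a minimal sketch, assuming that Transformers needs the separately installed flash_attn package before "flash_attention_2" can be used. The helper names pick_attn_implementation and flash_attn_is_installed are my own, not part of any library, and the check does not prove that the GPU itself can run Flash Attention 2.

```python
import importlib.util


def flash_attn_is_installed() -> bool:
    """True when the optional flash_attn package can be found by Python."""
    return importlib.util.find_spec("flash_attn") is not None


def pick_attn_implementation(cuda_available: bool, flash_attn_installed: bool) -> str:
    """Return the attention backend name to hand to from_pretrained.

    Hypothetical helper: it only looks at whether CUDA is present and whether
    flash_attn is installed. A GPU that cannot run Flash Attention 2 can
    still fail later, so treat the result as a first filter.
    """
    if cuda_available and flash_attn_installed:
        return "flash_attention_2"
    return "eager"


# Usage inside the notebook cell (assumes torch is already imported there):
#   attn = pick_attn_implementation(torch.cuda.is_available(),
#                                   flash_attn_is_installed())
#   model = AutoModelForVision2Seq.from_pretrained(..., _attn_implementation=attn)
```

With only torch, transformers and pillow in the dependency list, this falls back to "eager", which matches the commented-out line in the cell above.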

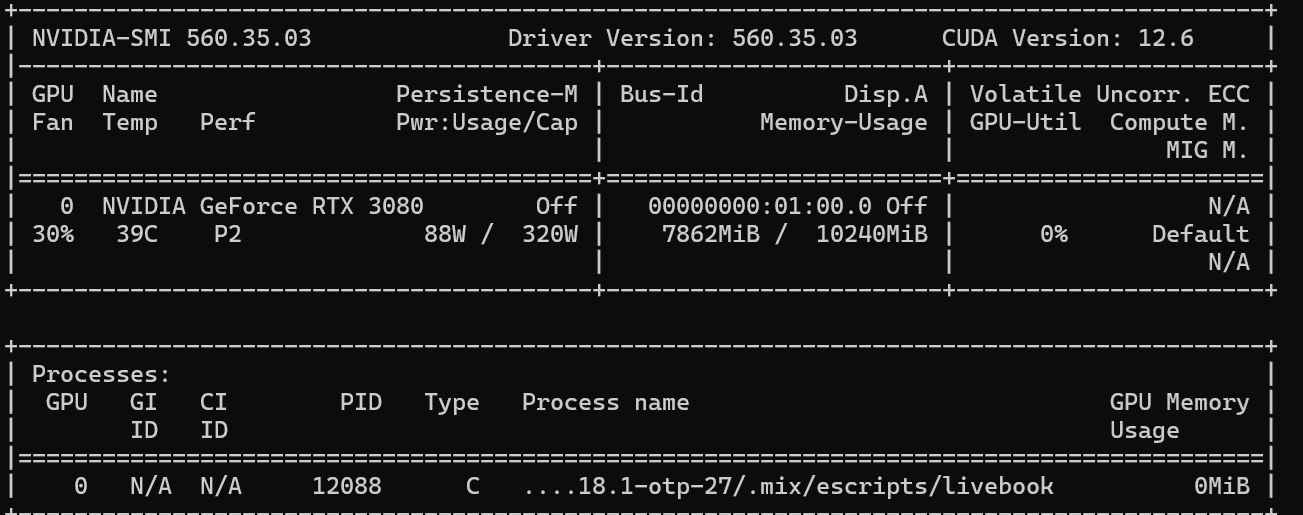

VRAM management on GPUs is always a thing

As usual when working with GPU-accelerated notebooks, you need to be careful about running two notebooks side by side. It is easy to get a CUDA out of memory error when you have a model that takes up more than half of the GPU VRAM. SmolVLM with two input images and bfloat16 uses 7.9 GB of VRAM. You can see this in the nvidia-smi output.

Using up over half the VRAM
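To check the headroom before opening a second notebook, the nvidia-smi numbers can be read programmatically. This is a rough sketch that uses nvidia-smi's CSV query output. The function names and the sample line are made up for illustration, and the sample figures are not a capture from my server.

```python
import subprocess


def query_gpu_memory() -> str:
    """Ask nvidia-smi for used and total memory in MiB, one CSV line per GPU."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=memory.used,memory.total",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout


def parse_gpu_memory(csv_text: str) -> list[tuple[int, int]]:
    """Turn lines like '8090, 11264' into (used_mib, total_mib) pairs."""
    rows = []
    for line in csv_text.strip().splitlines():
        used, total = (int(field) for field in line.split(","))
        rows.append((used, total))
    return rows


def has_room(used_mib: int, total_mib: int, needed_mib: int) -> bool:
    """True when the free memory covers another load of needed_mib."""
    return total_mib - used_mib >= needed_mib


# Illustrative sample only: one GPU with a model already loaded.
sample = "8090, 11264\n"
used, total = parse_gpu_memory(sample)[0]
print(has_room(used, total, needed_mib=8090))
```

On a machine with an Nvidia driver installed, parse_gpu_memory(query_gpu_memory()) would give the live numbers instead of the sample.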

One gotcha: when I ran the last cell twice, I received a CUDA out of memory error. I describe a solution later in this post.

Try the notebook

The Livebook code is on my GitHub repository as SmolVLM_on_linux.livemd. I’d be pleased to accept pull requests if you find an issue.

Repeating Samrat’s code

Now that we’ve demonstrated using the Python code from the model card, it’s time to repeat Samrat’s code. Be sure to close the existing notebook first. Samrat demonstrated holding on to the model in Pythonx’s global variables. By holding on to key global variables, we can repeatedly call the model in a manner similar to a “server” call.

CUDA out of memory errors

The current Pythonx examples show that the first call to Pythonx.eval receives an empty Elixir map and returns a {result, globals} tuple. That approach can work for Python libraries that don’t change global Python state. Following that pattern and the pattern in Samrat’s blog post, the first Pythonx.eval is passed an empty Elixir map, and its returned globals become the first set of global variables.

The first notebook I built had more code than just loading the SmolVLM model. I found that I needed to debug the Python code to see where things were failing. Each time I modified the Python code, I re-ran the cell. When the cell is re-run, a new model is loaded onto the GPU without freeing the GPU VRAM from the first model load. Each time I did this, I had to close the notebook to free the GPU VRAM.

globals trick for reevaluating cells that load models in Livebook

By defining globals in a separate cell before the one that loads the model, we make all of the cells re-evaluatable. The globals value is passed in at the bottom of the cell, and the new value is returned to the same, or a different, Elixir name. Because Elixir data is immutable, rebinding the name points it at new data and the original globals are no longer referenced. The old reference to the GPU VRAM is released and the model parameters are reloaded onto the GPU. This avoids the CUDA out of memory errors that plagued my development of this notebook. But it is time consuming to keep loading models onto the GPU, so consider using a separate cell just for loading the model(s) onto the GPU.

# Separate cell: start from an empty set of Python globals
globals = %{}

# Model-loading cell: rebinding globals here makes it safe to re-evaluate
{_, globals} = Pythonx.eval(
  """
  import torch
  from PIL import Image
  from transformers import AutoProcessor, AutoModelForVision2Seq
  from transformers.image_utils import load_image

  DEVICE = "cuda" if torch.cuda.is_available() else "cpu"

  # Initialize processor and model
  processor = AutoProcessor.from_pretrained("HuggingFaceTB/SmolVLM-Instruct")
  model = AutoModelForVision2Seq.from_pretrained(
      "HuggingFaceTB/SmolVLM-Instruct",
      torch_dtype=torch.bfloat16,
  ).to(DEVICE)
  """,
  globals
)
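The release of the old model can be pictured with a small pure-Python analogy. This is only a sketch of the idea, not how Pythonx is implemented. FakeModel and run_cell are hypothetical stand-ins for the real model and for Pythonx.eval, and the check relies on CPython's reference counting.

```python
import gc
import weakref


class FakeModel:
    """Hypothetical stand-in for a model that holds GPU memory."""


def run_cell(source: str, globals_in: dict) -> dict:
    """Run source against a copy of globals_in and return the new globals."""
    globals_out = dict(globals_in)
    exec(source, globals_out)
    return globals_out


LOAD_CELL = "model = FakeModel()"
empty = {"FakeModel": FakeModel}  # plays the role of the empty globals map

# First evaluation of the model-loading cell.
state = run_cell(LOAD_CELL, empty)
first_model = weakref.ref(state["model"])  # watch the model without keeping it alive

# Re-evaluating the cell rebinds the same name, so nothing refers to the
# first set of globals any more and the first model can be reclaimed.
state = run_cell(LOAD_CELL, empty)
gc.collect()

print("first model released:", first_model() is None)
```

If the first result were kept under another name, the weak reference would stay alive, which is the situation that led to my out of memory errors.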

Python functions needed in another cell must be global variables

# Pass in the existing global variables and return with the added variables
{_, new_globals} = Pythonx.eval(
  """
  def describe_image(image_url):
      messages = [
          {
              "role": "user",
              "content": [
                  {"type": "image"},
                  {"type": "text", "text": "Can you describe the images?"}
              ]
          },
      ]

      prompt = processor.apply_chat_template(messages, add_generation_prompt=True)
      inputs = processor(text=prompt, images=[image_url], return_tensors="pt")
      inputs = inputs.to(DEVICE)

      # Generate outputs
      generated_ids = model.generate(**inputs, max_new_tokens=500)
      generated_texts = processor.batch_decode(
          generated_ids,
          skip_special_tokens=True,
      )

      return generated_texts[0]
  """,
  # Passing the globals from the previous cell into this cell
  globals
)

bfloat16

float16 or bfloat16 should be available on Nvidia 20xx and newer GPUs. I don’t know of any model degradation issues when running float16 or bfloat16. If you have an Nvidia GPU, always try to run at 16 bits instead of float32. Hugging Face’s Accelerate has integrations with other GPU vendors, but I haven’t verified whether this notebook will work with them.

Also, I received some feedback from a PyTorch expert: in Pythonx, use bfloat16. The only reason to use float16 is for Google’s Colab. In Elixir, we aren’t constrained by Colab.
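The reason 16 bits matters for VRAM is plain arithmetic on the weights. Here is a back-of-the-envelope sketch. The parameter count is an illustrative round number, not SmolVLM's actual size, and the estimate covers weights only, so activations and image inputs come on top.

```python
# Bytes needed to store one parameter in each dtype.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2}


def weights_gib(n_params: int, dtype: str) -> float:
    """Memory for the model weights alone, in GiB."""
    return n_params * BYTES_PER_PARAM[dtype] / 2**30


# Illustrative parameter count (an assumption, not taken from the model card).
n_params = 2_000_000_000

for dtype in BYTES_PER_PARAM:
    print(f"{dtype}: {weights_gib(n_params, dtype):.2f} GiB")
```

Whatever the real parameter count is, float32 needs twice the weight memory of bfloat16, which on an 11 GB card can be the difference between fitting and not fitting.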

Defining a Python function

The describe_image eval defines a new Python function and receives the current global variables. The function is added to the global variable state and returned to Elixir as new_globals.


The calls to describe_image do not add any new entries to the Python global variables, so we can ignore the returned globals.

{desc1, _unchanged_globals} = Pythonx.eval(
  """
  describe_image("https://cdn.britannica.com/61/93061-050-99147DCE/Statue-of-Liberty-Island-New-York-Bay.jpg")
  """,
  new_globals
)

description1 = Pythonx.decode(desc1)

Try this more robust notebook

The Livebook code is on my GitHub repository as SmolVLM_server_model_separate_cell.livemd. I’d be pleased to accept pull requests if you find an issue.

Further work

The obvious deficiency of this notebook version is that the Python code pulls images directly from the internet. To be useful in Elixir, we need to pass an image pre-processed in Elixir into the PyTorch model and get the same instruction response. I’ll be working on that notebook next.